As part of its extended effort to be seen as something other than the largest spreader of misinformation, Facebook is working on several strategies, ranging from spinning misleading PR narratives to actual UI changes. Now, Facebook plans to penalize users who repeatedly share misinformation. New warnings will notify users that if they repeatedly share false claims, their posts will be moved lower in News Feed so that fewer people see them.

It’s an extension of Facebook’s fact-checking policies, which have met with considerable skepticism since the company launched them in 2016. Under those policies, Facebook down-ranked individual posts that had been debunked by fact-checkers, but posts could go viral before they were reviewed, and there was little warning to discourage users from sharing them in the first place. With the change, Facebook says it will warn users about the consequences of repeatedly sharing misinformation.

“Starting today, we will reduce the distribution of all posts in News Feed from an individual’s Facebook account if they repeatedly share content that has been rated by one of our fact-checking partners,” the company wrote in a press release.

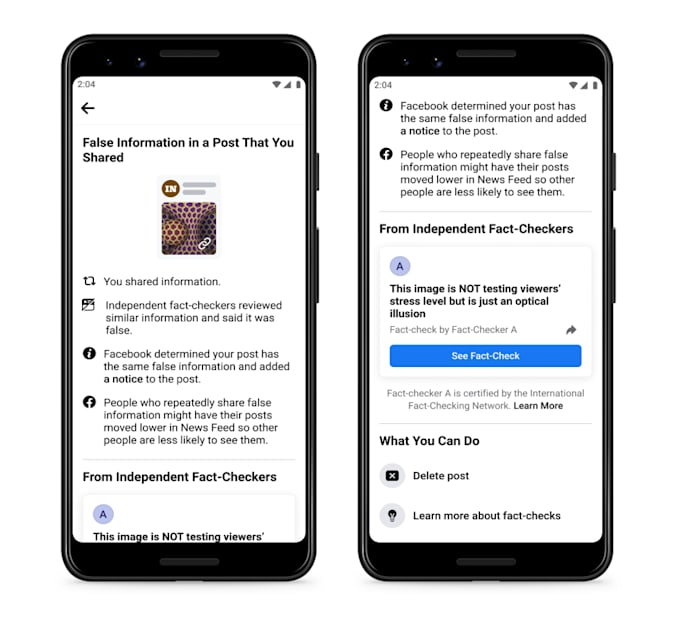

For Pages that repeatedly violate the policy, Facebook will show pop-up warnings when new users try to follow them. Individuals who regularly share misinformation will receive notifications that their posts may be less visible in News Feed. The notifications will also include a link to the fact check for the post in question and give users the option to delete the post.

“It also includes a notice that people who repeatedly share false information may have their posts moved lower in News Feed so other people are less likely to see them,” added Facebook.

The update is especially significant after a year in which Facebook was unable to control viral misinformation about the coronavirus pandemic, the presidential election, and COVID-19 vaccines. “Whether it’s false or misleading content about COVID-19 and vaccines, climate change, elections, or other topics, we’re making sure fewer people see misinformation on our apps,” the company wrote in a blog post.

Facebook didn’t confirm how many posts it would take to trigger the reduction in News Feed, but the company has already used a similar “strike” system for pages that share misinformation.

Researchers have found that misinformation is often shared by the same individuals who are behind the most viral false claims. The effectiveness of the new warnings will depend on how open people are to believing the debunking.
