Update: Today, Nvidia officially announced the A100 80GB PCIe GPUs, which, according to the company, offer “increased GPU memory bandwidth 25 percent compared with the A100 40GB, to 2TB/s, and provide 80GB of HBM2e high-bandwidth memory.”

NVIDIA partner support for the A100 80GB PCIe includes Atos, Cisco, Dell Technologies, Fujitsu, H3C, HPE, Inspur, Lenovo, Penguin Computing, QCT, and Supermicro. The HGX platform, which features A100-based GPUs interconnected via NVLink, is also available through cloud services from Amazon Web Services, Microsoft Azure, and Oracle Cloud Infrastructure.

Original Article: According to a listing spotted by Videocardz, Nvidia is planning to launch an 80GB model of the A100 with a PCIe interface as soon as next week. Currently, the 80GB variant is only available in the SXM form factor.

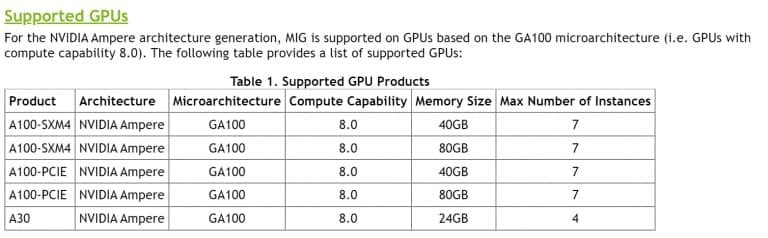

Last year, Nvidia launched the A100 HPC accelerator, its fastest and biggest GPU, which packs 54 billion transistors onto an 826 mm² die. It initially shipped with 40GB of HBM2 memory, and an 80GB HBM2e model followed later in the year. The A100 is offered in two form factors: an SXM4 module or a standard PCIe 4.0 card. Now it seems Nvidia is planning to give the PCIe version a significant memory upgrade.

As reported by Videocardz, the new 80GB A100 PCIe accelerator (which is not yet official) keeps largely the same core configuration, but thanks to its 80GB of HBM2e memory it delivers higher memory bandwidth: 2.0 TB/s, compared with 1.55 TB/s for the 40GB variant. Nvidia is reportedly planning to launch it next week, and it could be priced at US$20,000 or more.

For now, only three variants of the A100 accelerator are officially available: the 40GB and 80GB SXM models and the 40GB PCIe model.